Mnemora

Local-first, privacy-focused personal context engine that lets you chat with your documents using offline AI models

About Mnemora

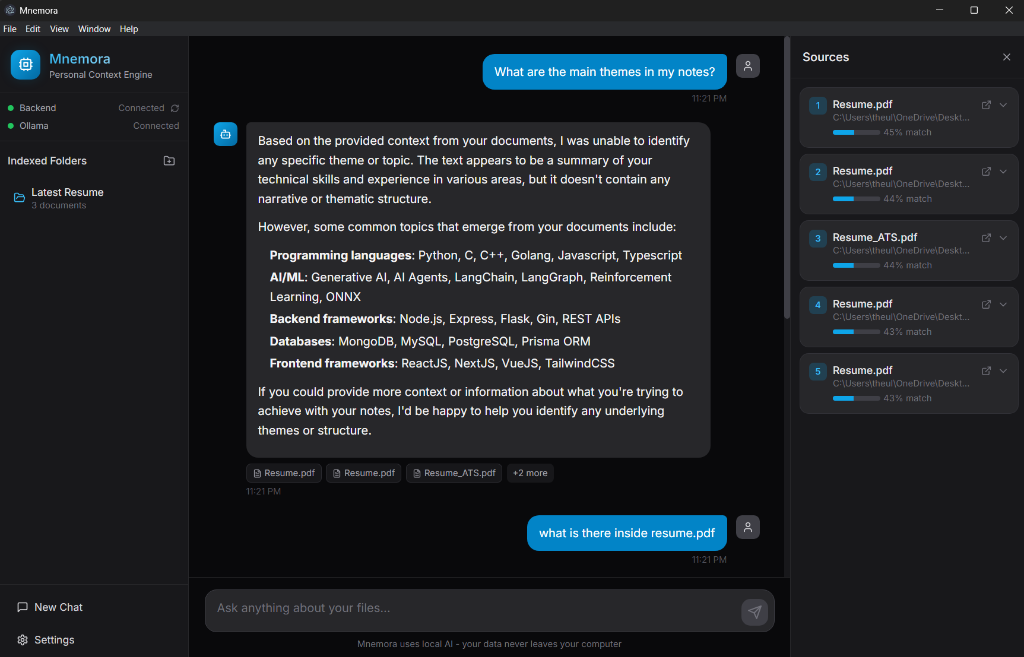

Mnemora is a local-first, privacy-focused personal context engine for chatting with your documents. Unlike cloud-based solutions, Mnemora processes everything locally using Ollama, so your data never leaves your computer. It supports Markdown (including Obsidian wiki-links, tags, and YAML frontmatter), PDFs (full text extraction with page markers), plain text files, and code files (language detection and structure extraction). Documents are indexed in ChromaDB, a vector database, for semantic search, so you can find information across thousands of documents using natural language queries. Every answer includes citations showing which documents contributed to the response, along with relevance scores. The interface is dark-themed and built with React and Tailwind CSS, responses stream as they are generated, and the project is open source and extensible.
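To make the idea of cited, relevance-scored answers concrete, here is a minimal sketch of ranking document chunks against a question. It is not Mnemora's implementation: the `embed`, `cosine`, and `retrieve` functions and the sample file names are hypothetical, and a toy bag-of-words vector stands in for the embeddings that a vector database such as ChromaDB would store.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: dict[str, str], top_k: int = 2) -> list[tuple[str, float]]:
    """Return (source, relevance) pairs, most relevant first, skipping zero scores."""
    q = embed(query)
    scored = [(source, cosine(q, embed(text))) for source, text in chunks.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [(source, round(score, 3)) for source, score in scored[:top_k] if score > 0]


# Hypothetical indexed chunks, keyed by the file they came from.
chunks = {
    "notes/ollama.md": "Ollama runs large language models locally on your machine",
    "notes/garden.md": "Tomatoes need full sun and regular watering",
    "papers/rag.pdf": "Retrieval augmented generation grounds language models in documents",
}

citations = retrieve("which language models run locally", chunks)
for source, relevance in citations:
    print(f"{source}: relevance {relevance}")
```

In a real pipeline the retrieved chunks would be passed to the local model as context, and the `(source, relevance)` pairs would be shown next to the answer as citations.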

Powerful Features

100% Private - All processing happens locally, no cloud, no data sharing

Index Any Folder - Add folders containing documents and let Mnemora understand them

Natural Chat Interface - Ask questions in plain English and get intelligent answers

Smart Citations - See exactly which documents contributed to each answer

Relevance Scoring - Visual indicators show how relevant each source is

Streaming Responses - See AI responses in real time as they are generated

Multiple Document Types - Support for Markdown, PDF, plain text, and code files

Semantic Search - Find information across thousands of documents using natural language

Beautiful UI - Modern, dark-themed interface built with React and Tailwind CSS

Extensible - Open source, well-documented, and easy to customize

Powered by Ollama - Local LLM inference with models like Llama 3.2, Mistral, CodeLlama

ChromaDB Integration - High-performance vector database for semantic search

FastAPI Backend - Modern Python backend with async support

Cross-Platform - Electron-based desktop application for Windows, macOS, and Linux

Markdown Support - Obsidian [[wiki-links]], #tags, YAML frontmatter

PDF Support - Full text extraction with page markers

Code Support - Language detection and structure extraction for multiple programming languages
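As an illustration of the Markdown support listed above, the following sketch pulls Obsidian-style wiki-links, tags, and frontmatter out of a note. The function names and regular expressions are assumptions made for this example, not Mnemora's parser, and the frontmatter handling only covers flat `key: value` lines; real YAML would need a proper YAML parser.

```python
import re

# [[Target]], [[Target|alias]], or [[Target#heading]]: capture only the target.
WIKI_LINK = re.compile(r"\[\[([^\]|#]+)(?:#[^\]|]*)?(?:\|[^\]]*)?\]\]")
# #tag or #nested/tag; a heading marker ("# Title") has a space after '#', so it is skipped.
TAG = re.compile(r"(?<![\w#])#([A-Za-z][\w/-]*)")


def split_frontmatter(text):
    """Split a leading '---' delimited block from the body.

    Simplification: only flat 'key: value' lines are parsed, not full YAML.
    """
    lines = text.splitlines()
    if lines and lines[0].strip() == "---":
        for i, line in enumerate(lines[1:], start=1):
            if line.strip() == "---":
                meta = {}
                for entry in lines[1:i]:
                    key, sep, value = entry.partition(":")
                    if sep:
                        meta[key.strip()] = value.strip()
                return meta, "\n".join(lines[i + 1:])
    # No frontmatter, or no closing delimiter: treat everything as body.
    return {}, text


def parse_note(text):
    """Collect frontmatter, wiki-link targets, and tags from one note."""
    meta, body = split_frontmatter(text)
    return {
        "frontmatter": meta,
        "links": WIKI_LINK.findall(body),
        "tags": TAG.findall(body),
    }


# Hypothetical note used only to exercise the sketch.
note = """---
title: Project ideas
status: draft
---
# Ideas
See [[Roadmap]] and [[Meeting Notes|last week's notes]] for context. #planning #work/q3
"""

parsed = parse_note(note)
print(parsed)
```

Metadata extracted this way could be stored alongside each indexed chunk so that search results can be filtered by tag or linked note.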

Ready to Contribute?

Check out Mnemora on GitHub and start contributing to the open source community